Elastic Search + Ghost: Part I

This is the first part of a series of posts detailing how to set up Elastic Search and integrate it into your Ghost blog. The aim of this series is to make search a core feature of your blog.

Introduction

Before we begin, please ensure that

- you have (SSH) access to the server that is running your Ghost blog.

- you have the required permissions to be able to edit the Ghost source code and restart the server.

- you have root access on the server where you want to install Elastic Search (I’ve installed it on the same machine that hosts the blog).

Elastic Search is an amazing distributed, real-time search platform. It is incredible at indexing large amounts of data and searching across it really fast. It’s built on top of Apache Lucene, so it is very good at full-text search as well. Data is stored as JSON documents and is retrieved via a RESTful API. You can find the official documentation here.

Installing Elastic Search

I’ll be installing Elastic Search on Ubuntu 12.04 on an AWS micro instance (which also runs this blog). Installing Elastic Search is fairly simple; just follow these steps:

cd ~

sudo apt-get update

sudo apt-get install openjdk-7-jre-headless -y

wget https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.0.1.deb

sudo dpkg -i elasticsearch-1.0.1.deb

sudo service elasticsearch start

OK, so now you should have Elastic Search up and running on your machine. To check, send a cURL request to localhost:9200. You should get back something like this:

{

"status": 200,

"name": "Bevatron",

"version": {

"number": "1.0.1",

"build_hash": "5c03841c42b",

"build_timestamp": "2014-02-25T15:52:53Z",

"build_snapshot": false,

"lucene_version": "4.6"

},

"tagline": "You Know, for Search"

}

Your node name will be different, since Elastic Search picks one at random (awesome, isn’t it?!).
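If you would rather script this check than run it by hand, here is a minimal Python sketch. It assumes Elastic Search is listening on localhost:9200 as set up above; the helper names `fetch_root_info` and `describe_node` are made up for this post and are not part of any Elastic Search client.

```python
import json
from urllib.request import urlopen

# Assumption: Elastic Search is reachable here, as in the install steps above.
ES_URL = "http://localhost:9200"


def fetch_root_info(base_url=ES_URL):
    """GET the root endpoint and return the decoded JSON body."""
    with urlopen(base_url, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


def describe_node(info):
    """Summarise the fields shown in the sample response above."""
    return "{} is running Elastic Search {} (Lucene {})".format(
        info["name"],
        info["version"]["number"],
        info["version"]["lucene_version"],
    )
```

Calling `print(describe_node(fetch_root_info()))` on the server should print a one-line summary with your own node name in it.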

Working with Elastic Search

Before we enter or update (index) data in Elastic Search, we need to know what an index is and what a type is.

An index is like a ‘database’ in a relational database. It has a mapping which defines multiple types.

An index is a logical namespace which maps to one or more primary shards and can have zero or more replica shards.

To stay focused, I won’t go any deeper into indexes and types; you can read up on them here. As a gross simplification, consider an index to be the equivalent of a relational database and a type to be the equivalent of a table in that database.

Entering Data

Alright, let’s put in some sample data. I’m using a Chrome extension called Postman to make HTTP calls.

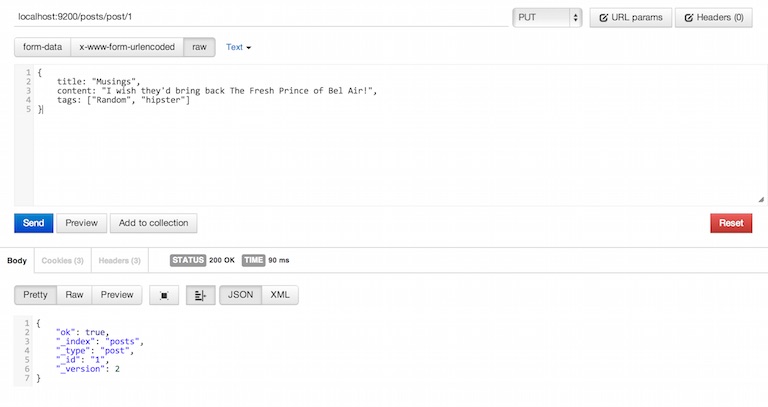

To enter data, you need to send a PUT request to http://localhost:9200/<index>/<type>/<id>. If the index and the type do not exist, they will be created on the fly.

The "ok": true indicates that all went well and the document was created with "id": 1. The index posts and the type post were both created on the fly while creating this document. Elastic Search also figured out the default schema (mapping) for the type post.
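The same PUT can be made from code instead of Postman. Below is a rough Python sketch, assuming the localhost:9200 setup from earlier. The helpers `build_index_request` and `send` are hypothetical names, and the document fields in the usage note are invented for illustration; they are not the exact body shown in the screenshot.

```python
import json
from urllib.request import Request, urlopen

# Assumption: same host and port as in the install steps above.
ES_URL = "http://localhost:9200"


def build_index_request(index, doc_type, doc_id, document, base_url=ES_URL):
    """Build (but do not send) a PUT request that indexes `document`."""
    url = "{}/{}/{}/{}".format(base_url, index, doc_type, doc_id)
    body = json.dumps(document).encode("utf-8")
    return Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


def send(request):
    """Send a prepared request and return the decoded JSON response."""
    with urlopen(request, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `send(build_index_request("posts", "post", 1, {"title": "Hello", "content": "First post"}))` would index a small made-up post under the index posts and the type post. Keeping the request building separate from the sending makes it easy to inspect the URL and body before anything hits the server.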

Retrieving Data

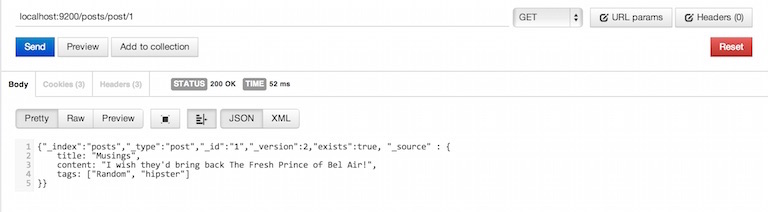

Let’s retrieve the document we created in the previous step. Documents are retrieved via GET requests. The URL is: http://localhost:9200/<index>/<type>/<id>.
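Here is how the retrieval could look in Python. It is a sketch under a couple of assumptions: Elastic Search is on localhost:9200, and the GET response wraps the stored document in a "_source" field. The function names are made up for this post.

```python
import json
from urllib.request import urlopen

# Assumption: same host and port as in the install steps above.
ES_URL = "http://localhost:9200"


def document_url(index, doc_type, doc_id, base_url=ES_URL):
    """Return the URL that addresses a single document."""
    return "{}/{}/{}/{}".format(base_url, index, doc_type, doc_id)


def extract_source(response_body):
    """Return the stored document from a GET response body.

    Assumption: the original document sits under the "_source" key.
    """
    return json.loads(response_body)["_source"]


def get_document(index, doc_type, doc_id):
    """Fetch one document and return only its stored fields."""
    with urlopen(document_url(index, doc_type, doc_id), timeout=5) as response:
        return extract_source(response.read().decode("utf-8"))
```

`get_document("posts", "post", 1)` should hand back the document indexed earlier. If the document does not exist, the server answers with a 404 and `urlopen` raises `urllib.error.HTTPError`, so real code would want to catch that.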

Searching

The simplest way to search is to pass a query string parameter (q) to the _search endpoint of a specific index or type.

Searching for the word “mango” in all types under the index food:

http://localhost:9200/food/_search?q=mango

Searching for the word “mango” in the type fruits in the index food:

http://localhost:9200/food/fruits/_search?q=mango

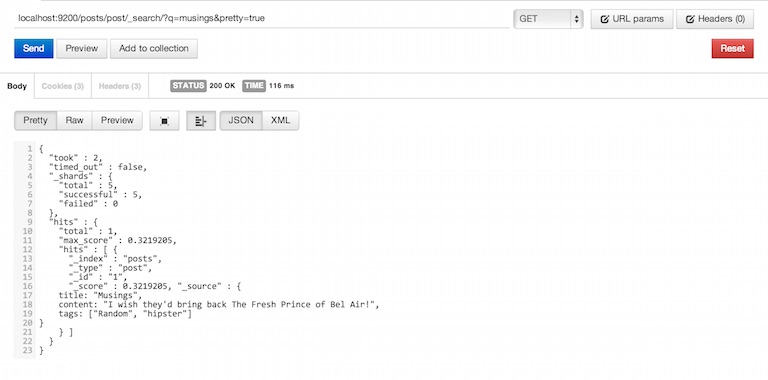

Let’s search for our document:

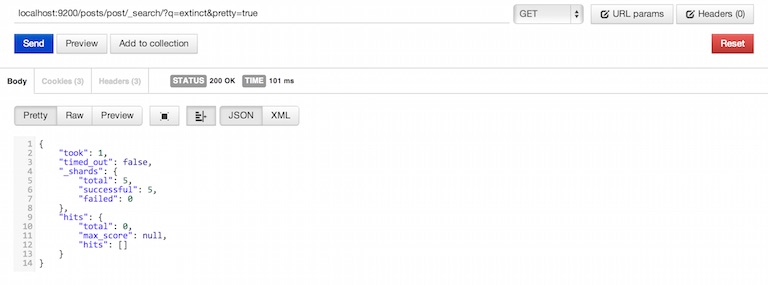

Let’s search for something that doesn’t exist:

Note: Appending pretty=true to the query string makes Elastic Search pretty-print the output.

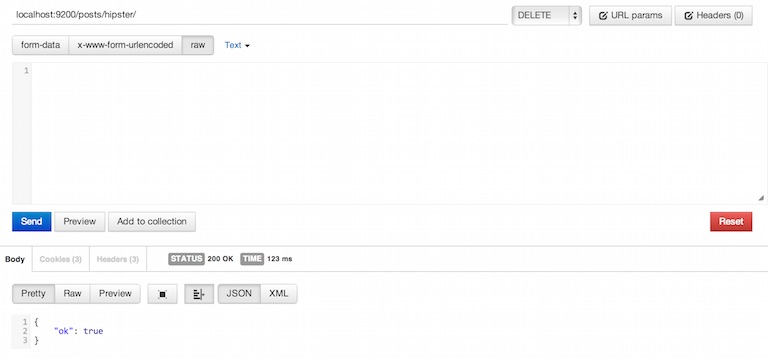
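The search URLs above are easy to build in code. This Python sketch assumes the localhost:9200 setup and that matches come back under hits.hits, each carrying its document in "_source". `search_url`, `extract_hits` and `search` are hypothetical helper names, not part of an official client.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: same host and port as in the install steps above.
ES_URL = "http://localhost:9200"


def search_url(query, index, doc_type=None, pretty=False, base_url=ES_URL):
    """Build a query-string search URL for an index, or a type within it."""
    parts = [base_url, index]
    if doc_type is not None:
        parts.append(doc_type)
    parts.append("_search")
    params = {"q": query}
    if pretty:
        params["pretty"] = "true"  # ask for pretty-printed JSON
    return "/".join(parts) + "?" + urlencode(params)


def extract_hits(response_body):
    """Return the matching documents (assumed to be under hits.hits)."""
    hits = json.loads(response_body)["hits"]["hits"]
    return [hit["_source"] for hit in hits]


def search(query, index, doc_type=None):
    """Run the search and return the list of matching documents."""
    with urlopen(search_url(query, index, doc_type), timeout=5) as response:
        return extract_hits(response.read().decode("utf-8"))
```

`search("mango", "food", "fruits")` mirrors the second URL above, and a search with no matches simply returns an empty list. Using `urlencode` means a query containing spaces or other special characters is escaped properly.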

Deleting

Deletes are done via the DELETE verb.

DELETE http://localhost:9200/posts/post/1 will delete the post with id 1.

DELETE http://localhost:9200/posts/post will delete everything under the type post.

DELETE http://localhost:9200/posts will delete everything under the index posts.
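The three delete scopes can be captured in one small helper. This is only a sketch, assuming the same localhost:9200 setup. `build_delete_request` is a made-up name, and it only builds the request, so nothing is deleted until you pass the result to `urllib.request.urlopen`.

```python
from urllib.request import Request

# Assumption: same host and port as in the install steps above.
ES_URL = "http://localhost:9200"


def build_delete_request(index, doc_type=None, doc_id=None, base_url=ES_URL):
    """Build a DELETE request for a document, a type, or a whole index."""
    if doc_id is not None and doc_type is None:
        raise ValueError("a document id requires a type")
    parts = [base_url, index]
    if doc_type is not None:
        parts.append(doc_type)
        if doc_id is not None:
            parts.append(str(doc_id))
    return Request("/".join(parts), method="DELETE")
```

`build_delete_request("posts", "post", 1)` targets a single post, while `build_delete_request("posts")` targets the whole index. Be careful with the broader forms, because they remove everything underneath them.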

Next Steps

So we have set up Elastic Search and have covered how to perform basic CRUD. In the next chapter, we’ll extract data from the Ghost blog and index all of it into Elastic Search.

Keep commenting!